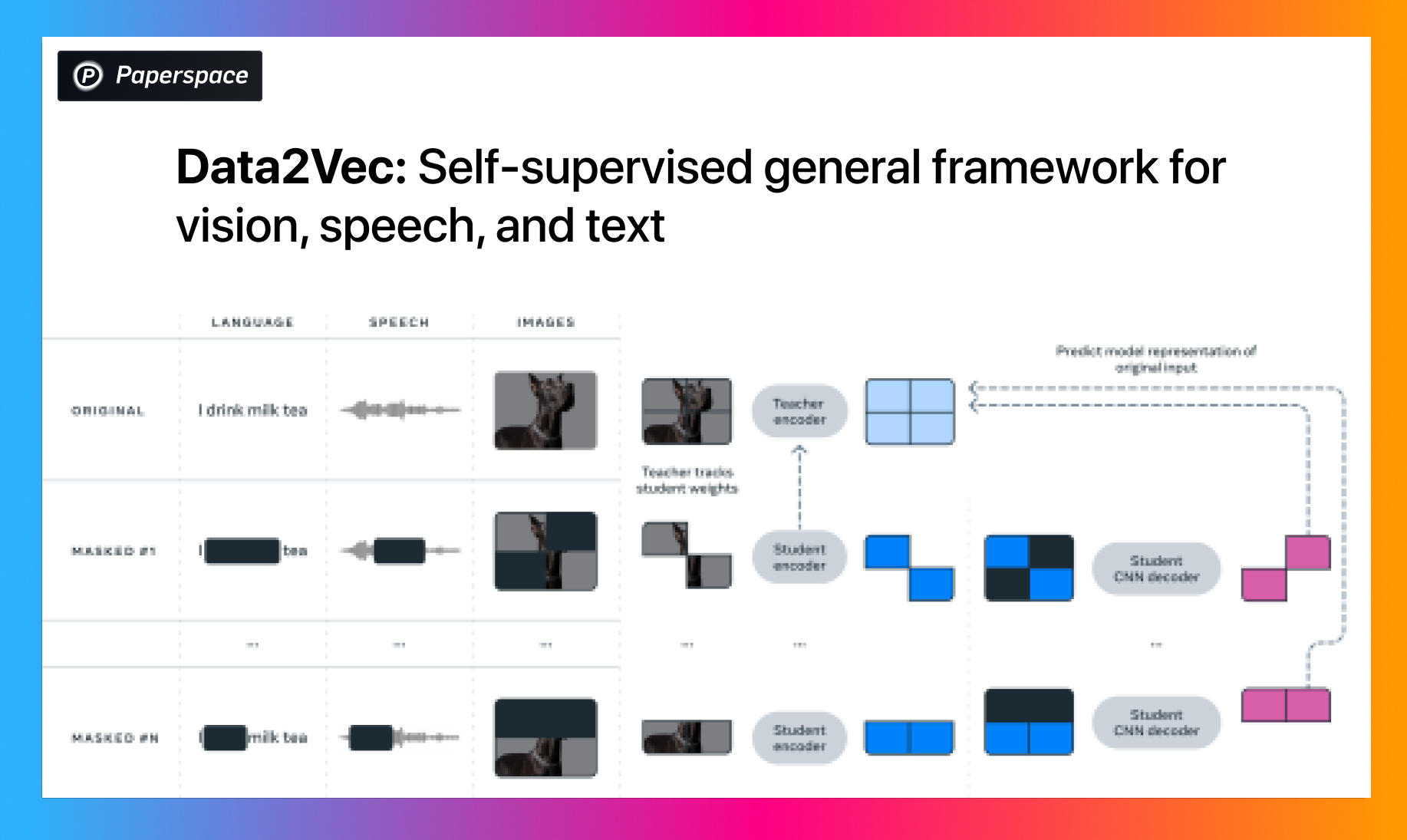

Multimodal pre-training is a potential game changer in spoken language processing. In this blog post, we review three recent papers on the topic by Meta (Data2Vec), Microsoft and academic partners (SpeechT5) and Google (mSLAM). We discuss how these multimodal speech-text pre-trained models are used to build more holistic speech systems, and what is required to extend their capabilities and make them more accessible.

Brief background on self-supervised learning for both speech and text

Self-supervised pre-training has been successful for both speech and text. In speech, models are pre-trained by solving pseudo-tasks such as contrastive predictive coding (CPC), which distinguishes a future speech frame from distractor samples using latent representations from a convolutional architecture (1). In 2020, the same group introduced wav2vec2.0 (2), which builds on the CPC idea but uses discrete speech units as latent representations; these are fed to a transformer architecture to build contextualized representations. In 2021, HuBERT (3) kept a similar encoder but used a cross-entropy loss (like BERT (4)) instead of a contrastive loss, and scaled to 1 billion parameters. Both wav2vec2.0 and HuBERT are now considered essential for extracting good representations and/or initializing speech encoders for tasks such as automatic speech recognition (ASR).

Until very recently, pre-trained models in speech were encoder-only models. For text, however, encoder-decoder models such as BART (5) and T5 (6) were introduced in 2020. These transformer-based sequence-to-sequence models consist of an encoder and an autoregressive decoder. They are trained to reconstruct (i.e. denoise) the original text from a noisy version corrupted with a set of noising functions. Their multilingual counterparts, mBART (7) and mT5 (8), appeared shortly afterwards.

1: Data2Vec, a ‘modality agnostic’ extension of encoder-only models

Data2Vec (9) by Meta proposes a unique training procedure for learning transformer models from speech, images or text. The authors highlight that, while the concept of self-supervised learning is now common to various modalities, the actual algorithms and objectives differ widely and are often modality-dependent. Data2Vec uses the concept of masked prediction, but instead of predicting modality-dependent symbols (subword units for text, visual tokens for images or abstract speech units), the model tries to predict contextualized latent representations. The authors believe that Data2Vec is a first step towards a general self-supervised learning paradigm.
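To make the Data2Vec objective concrete, here is a minimal sketch of masked prediction of latent representations in plain Python. It rests on our reading of the Data2Vec paper rather than on anything stated above: a teacher network (an exponential moving average of the student) sees the unmasked input, its top layers are averaged into targets, and the student regresses those targets at the masked positions with a smooth L1 loss. All function names and toy numbers are illustrative and do not come from the authors' code.

```python
def ema_update(teacher, student, tau):
    """Move teacher weights towards the student: t <- tau * t + (1 - tau) * s."""
    return [tau * t + (1.0 - tau) * s for t, s in zip(teacher, student)]


def average_top_k(layer_outputs, k):
    """Average the last k layer outputs, position by position, to form targets."""
    top = layer_outputs[-k:]
    n_pos, dim = len(top[0]), len(top[0][0])
    return [
        [sum(layer[p][d] for layer in top) / len(top) for d in range(dim)]
        for p in range(n_pos)
    ]


def smooth_l1(pred, target, beta=1.0):
    """Quadratic for small errors, linear for large ones."""
    diff = abs(pred - target)
    return 0.5 * diff * diff / beta if diff < beta else diff - 0.5 * beta


def masked_regression_loss(student_pred, targets, mask, beta=1.0):
    """Mean smooth L1 loss over the masked positions only."""
    total, count = 0.0, 0
    for pred_vec, target_vec, is_masked in zip(student_pred, targets, mask):
        if not is_masked:
            continue  # unmasked positions do not contribute to the loss
        for p, t in zip(pred_vec, target_vec):
            total += smooth_l1(p, t, beta)
            count += 1
    return total / count if count else 0.0


# Toy example: 3 positions, 2-dimensional representations, 2 teacher layers.
teacher_layers = [
    [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0]],
    [[2.0, 2.0], [3.0, 3.0], [4.0, 4.0]],
]
targets = average_top_k(teacher_layers, k=2)

# Hypothetical student outputs computed from the masked input.
student_pred = [[1.0, 1.0], [2.5, 2.0], [0.0, 3.0]]
mask = [False, True, True]  # positions 1 and 2 were masked

loss = masked_regression_loss(student_pred, targets, mask)
new_teacher = ema_update([1.0, 0.0], [0.0, 1.0], tau=0.9)
```

Nothing in this loss refers to subword units, visual tokens or speech units: the targets are simply vectors, which is why the same objective can be reused across modalities once a modality-specific feature encoder and masking strategy are in place.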
